Why this matters right now

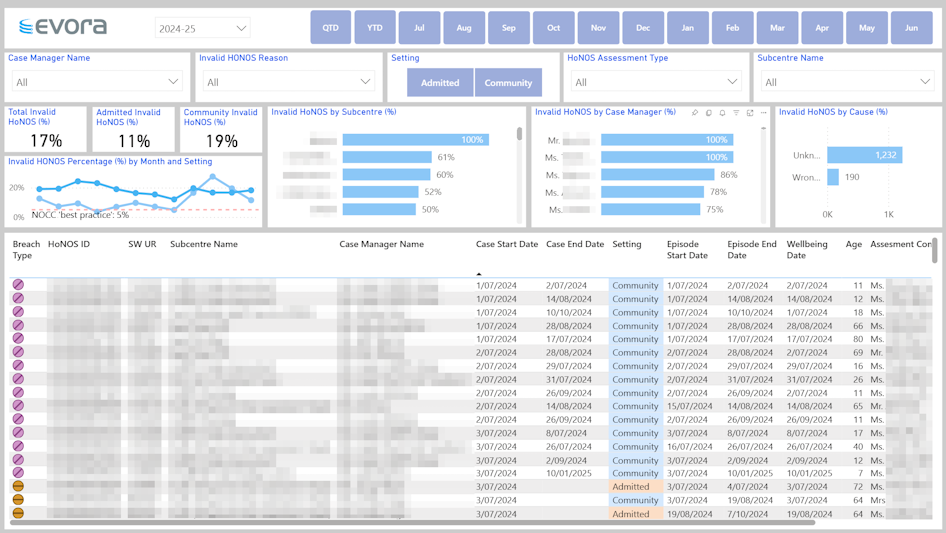

Across Australia, mental-health clinicians record millions of Health of the Nation Outcome Scales (HoNOS) ratings each year. A quick look at a partnering health service's de-identified 2024-25 dashboard (Figure 1, above) tells a confronting story: roughly one in six assessments (17 %) failed validity checks. A single error category, “Unknown assessment scale rating”, generated 1,232 faulty records on its own.

Poor-quality HoNOS data is more than an administrative annoyance; it dilutes clinical insight, wastes staff time and puts both funding and accreditation at risk. The upside? Every percentage-point lift in data quality pays dividends right across the health service.

1. Setting the scene – what HoNOS is for

HoNOS forms the backbone of the National Outcomes and Casemix Collection (NOCC), the mandatory dataset that states and territories submit to the Commonwealth each quarter. The latest NOCC technical specs, effective 1 July 2024, lift the benchmark for both completeness and accuracy. Many states have already tied HoNOS directly to the Australian Mental Health Care Classification (AMHCC), the classification that underpins activity-based funding for mental-health care across admitted, community and residential settings. Put bluntly: shaky HoNOS data has real funding impact.

Clinically, the scales enjoy robust validity and reliability in Australian settings. When recorded correctly, they give teams a shared language for tracking symptom change, tailoring treatment and benchmarking results across sites. The catch? They only work if they’re right.

2. The triple-win case for quality

Improving HoNOS data delivers benefits on three fronts:

- Clinical gains – Reliable symptom trajectories allow early flagging of deterioration, smoother hand-offs between inpatient and community teams, and genuinely personalised care plans.

- Administrative wins – Fewer records need chasing, end-of-month reporting accelerates and cross-team comparisons can be trusted. Hours saved on data-cleaning flow straight back to high-value tasks.

- Regulatory & funding security – Clean data meets NOCC thresholds, feeds AMHCC weightings accurately and keeps your service in the green on state performance scorecards. That protects revenue and strengthens accreditation evidence.

Victorian mental-health performance reports, for instance, now publish site-level metrics on outcome-measure completeness (Victorian Department of Health, Mental Health Performance Reports). Public scrutiny is only heading in one direction.

3. Diagnosing the problem – what the data tells us

A single dashboard (see Figure 1) reveals the anatomy of the issue. Five visuals are particularly telling:

- Invalid HoNOS % by Month & Setting – A 12-month trend line, split by Admitted versus Community. Seasonal spikes (e.g. during holiday leave) and setting-specific pain points jump out instantly.

- Invalid HoNOS by Sub-centre – A horizontal bar chart where one unit tops out at 100 % invalid. That pinpoints exactly where coaching or system fixes are urgently needed.

- Invalid HoNOS by Case Manager – Clinicians are ranked from perfect to problematic. Targeted feedback beats blanket training every time.

- Invalid HoNOS by Cause – Unknown assessment scale rating dominates, followed by Wrong assessment type. Knowing the cause guides the solution.

- Record-level Drill-through – UR numbers, dates, setting and episode links let quality officers correct or exclude faulty records on the spot.

Read together, these visuals expose not just that errors exist but why they occur and who can fix them.
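For teams without a dashboard tool to hand, the same breakdowns can be approximated from a record-level extract. The sketch below is illustrative only: the field names (`month`, `setting`, `sub_centre`, `valid`, `cause`) and the sample rows are assumptions, not a NOCC extract format or the partner service's data.

```python
from collections import Counter, defaultdict

# Hypothetical record-level extract; field names and rows are illustrative only.
records = [
    {"month": "2024-07", "setting": "Admitted", "sub_centre": "Unit A",
     "valid": True, "cause": None},
    {"month": "2024-07", "setting": "Community", "sub_centre": "Unit B",
     "valid": False, "cause": "Unknown assessment scale rating"},
    {"month": "2024-08", "setting": "Community", "sub_centre": "Unit B",
     "valid": False, "cause": "Wrong assessment type"},
    {"month": "2024-08", "setting": "Admitted", "sub_centre": "Unit A",
     "valid": True, "cause": None},
    {"month": "2024-08", "setting": "Community", "sub_centre": "Unit B",
     "valid": False, "cause": "Unknown assessment scale rating"},
]


def invalid_rate_by(rows, *keys):
    """Return {group tuple: percentage of invalid records} for the given keys."""
    totals = defaultdict(int)
    invalid = defaultdict(int)
    for row in rows:
        group = tuple(row[key] for key in keys)
        totals[group] += 1
        if not row["valid"]:
            invalid[group] += 1
    return {group: 100.0 * invalid[group] / totals[group] for group in totals}


def invalid_by_cause(rows):
    """Count invalid records per recorded cause."""
    return Counter(row["cause"] for row in rows if not row["valid"])


by_month_setting = invalid_rate_by(records, "month", "setting")
by_sub_centre = invalid_rate_by(records, "sub_centre")
by_cause = invalid_by_cause(records)

for group, rate in sorted(by_sub_centre.items()):
    print(group[0], f"{rate:.0f} % invalid")
```

Grouping on a tuple of keys keeps one function reusable for the month-and-setting trend, the sub-centre ranking and the case-manager ranking alike.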

4. Five practical strategies to lift data quality

1. Establish real-time feedback loops

Send each clinician a weekly snapshot of their valid-rating percentage, benchmarked against the team median. Behavioural-economics research shows most people move toward the norm once they can see it.
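One way the weekly snapshot might be calculated is sketched below. The clinician labels and counts are made up for illustration, and the choice to skip clinicians with no ratings in the week is an assumption rather than an established rule.

```python
from statistics import median

# Hypothetical weekly counts per clinician: (valid ratings, total ratings).
weekly_counts = {
    "Clinician A": (18, 20),
    "Clinician B": (10, 10),
    "Clinician C": (6, 12),
}


def weekly_snapshots(counts):
    """Return each clinician's valid-rating percentage and gap to the team median."""
    # Assumption: clinicians with no ratings this week are left out of the snapshot.
    pcts = {
        name: 100.0 * valid / total
        for name, (valid, total) in counts.items()
        if total > 0
    }
    team_median = median(pcts.values())
    return {
        name: {
            "valid_pct": pct,
            "team_median": team_median,
            "gap": pct - team_median,
        }
        for name, pct in pcts.items()
    }


snapshots = weekly_snapshots(weekly_counts)
for name, snap in snapshots.items():
    print(f"{name}: {snap['valid_pct']:.0f} % valid "
          f"(team median {snap['team_median']:.0f} %, gap {snap['gap']:+.0f} points)")
```

Using the median rather than the mean keeps one very low or very high rater from shifting the benchmark everyone else is compared against.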

2. Run short, scenario-based refresher sessions

The national training material is solid, but outliers learn faster when they see their own data. Turn the “top and bottom five” chart into live case studies, pairing strong and struggling raters for peer-to-peer learning.

3. Co-design smart forms with clinicians

Build forms that block invalid score ranges, auto-populate assessment type from the care setting and refuse to save if mandatory items are blank. Sprinkle in brief “why this matters” tool-tips so the rationale is never lost.
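A minimal sketch of those save-time checks follows. It relies on the standard HoNOS structure of 12 items rated 0 to 4, with 9 recorded when a rating is not known. Everything else is an assumption for illustration: the function name, the assessment-type codes in `DEFAULT_ASSESSMENT_TYPE`, and the decision to warn on a 9 rather than block it, which is a local policy call.

```python
ITEM_COUNT = 12                   # HoNOS has 12 items
RATED_SCORES = {0, 1, 2, 3, 4}    # severity ratings
NOT_KNOWN = 9                     # recorded when the rating is not known

# Hypothetical mapping from care setting to a default assessment type code.
DEFAULT_ASSESSMENT_TYPE = {
    "Admitted": "ADMITTED_DEFAULT",
    "Community": "COMMUNITY_DEFAULT",
    "Residential": "RESIDENTIAL_DEFAULT",
}


def validate_honos_form(care_setting, ratings, assessment_type=None):
    """Check a form before saving; an empty 'errors' list means it may be saved."""
    errors, warnings = [], []

    # Auto-populate the assessment type from the care setting when not supplied.
    if assessment_type is None:
        assessment_type = DEFAULT_ASSESSMENT_TYPE.get(care_setting)
    if assessment_type is None:
        errors.append(f"No assessment type for care setting {care_setting!r}")

    if len(ratings) != ITEM_COUNT:
        errors.append(f"Expected {ITEM_COUNT} items, got {len(ratings)}")

    for item_no, score in enumerate(ratings, start=1):
        if score is None:
            errors.append(f"Item {item_no} is blank")
        elif score == NOT_KNOWN:
            # Assumption: a 9 is allowed but flagged so the rater can confirm it.
            warnings.append(f"Item {item_no} rated 9 (not known)")
        elif score not in RATED_SCORES:
            errors.append(f"Item {item_no} has out-of-range score {score}")

    return {"assessment_type": assessment_type,
            "errors": errors, "warnings": warnings}


result = validate_honos_form("Admitted", [0, 1, 2, 3, 4, 0, 1, 2, 3, 9, 5, None])
print(result["errors"])
print(result["warnings"])
```

Returning errors and warnings separately lets the form refuse to save on the first list while showing the second as a prompt, which is where a short “why this matters” tool-tip could sit.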

4. Tighten governance and stewardship

Nominate a HoNOS data steward who chairs a monthly “outcomes huddle”, reviews the dashboard, allocates corrective actions and tracks progress. Make data stewardship a named responsibility, not a side hustle.

5. Link quality to funding at executive level

Model how even a five-point lift in valid ratings could translate into extra activity-based funding under forthcoming national specifications. When quality improvements equate to budget protection, the C-suite listens.

5. Measuring success – what good looks like

Aim for four headline indicators:

- Invalid HoNOS rate below 5 % (down from the current 17 %). That aligns with best-practice NOCC completeness bands (see AMHOCN’s NOCC Volume & Completeness reports methodology).

- Unknown-cause errors under 20 % of all invalid records, signalling that most issues are now categorised and being addressed.

- Variation across sub-centres narrowed to less than ten percentage points. Consistency is a patient-safety issue as much as an efficiency one.

- Average turnaround time for correcting an invalid record below 14 days so dashboards drive near-real-time decisions.

Publish these metrics on a Data Quality scorecard. It telegraphs that outcome-measure quality is a clinical-risk issue, not just an administrative nuisance.
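The scorecard logic itself can be very small. In the sketch below the four targets come from the indicators above, and the 17 % invalid rate and the 100 % sub-centre come from the dashboard described earlier. All other observed values, and every name in the code, are hypothetical placeholders.

```python
# Targets taken from the four headline indicators; each is an upper limit.
TARGETS = {
    "invalid_rate_pct": 5.0,
    "unknown_cause_share_pct": 20.0,
    "sub_centre_spread_pts": 10.0,
    "avg_correction_days": 14.0,
}


def spread(rates):
    """Gap in percentage points between the best and worst sub-centre."""
    return max(rates) - min(rates)


def scorecard(observed):
    """Compare observed values with targets; 'met' means strictly below target."""
    return {
        name: {
            "observed": observed[name],
            "target": limit,
            "met": observed[name] < limit,
        }
        for name, limit in TARGETS.items()
    }


observed = {
    "invalid_rate_pct": 17.0,                             # from the dashboard
    "unknown_cause_share_pct": 18.0,                      # hypothetical
    "sub_centre_spread_pts": spread([100.0, 12.0, 4.0]),  # 12.0 and 4.0 are hypothetical
    "avg_correction_days": 21.0,                          # hypothetical
}

for name, row in scorecard(observed).items():
    status = "met" if row["met"] else "not met"
    print(f"{name}: {row['observed']:.1f} against target {row['target']:.1f} ({status})")
```

Keeping the targets in one dictionary means the steward can tighten a threshold in a single place as the service improves.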

6. What happens when you get it right?

- Clinicians begin trusting trend lines instead of noting every score, which speeds up case reviews and discharge planning.

- Managers reclaim hours once spent on data-cleaning and reinvest them in service redesign or staff supervision.

- Executives negotiate and plan confidently because funding weightings now reflect true case complexity.

- Regulators see your service in the green zone for NOCC compliance, smoothing accreditation and audit cycles.

Services that have cut their invalid rate in half report that multidisciplinary treatment-plan reviews happen up to a week sooner because teams walk in with reliable baseline scores.

7. A quick plan you can start tomorrow

- Open your dashboard – Run the invalid-rate-by-clinician view and share the unvarnished truth.

- Host a 30-minute raters’ huddle – Crowd-source practical fixes such as swapping quick reference ‘cheat sheets’ for tricky items, and pairing peers to review each other’s first five assessments.

- Appoint a HoNOS data steward – Give them licence to chase, coach and celebrate progress.

Improving HoNOS data may not be as glamorous as launching a new clinical program, but it silently underwrites every strategic goal, from safer care to sustainable funding. Get the data right, and better outcomes follow.

Need a deeper dive? If your team is wrestling with HoNOS priority order, managing contacts across service settings or weaving outcome measures into funding logic, let’s compare notes. Connect with me on LinkedIn and we’ll turn those red bars green together—because cleaner data means clearer decisions for the people who count on us.

References and further reading

- Australian Mental Health Outcomes & Classification Network (AMHOCN), National Outcomes and Casemix Collection Technical Specifications (v3.00), 2024.

- Independent Health and Aged Care Pricing Authority, Activity-Based Funding: Mental Health Care NBEDS 2024-25 – Technical Specifications.

- Victorian Department of Health, Mental Health Performance Reports, latest release.

- Burgess P. et al., “Assessing the Content Validity of the HoNOS 2018”, International Journal of Environmental Research and Public Health, 2022.

Written by Bernard Herrok, proofed by AI.
